Deepfakes, Cheap Fakes and Misinformation in India

Tattle's archive service captured a 19-second video clip from a chat app. The video showed 9 notable figures singing a song. These are the last 5 seconds of the video:

pic.twitter.com/SZ6pfYzo0w (tattle, @tattlemade, August 7, 2020)

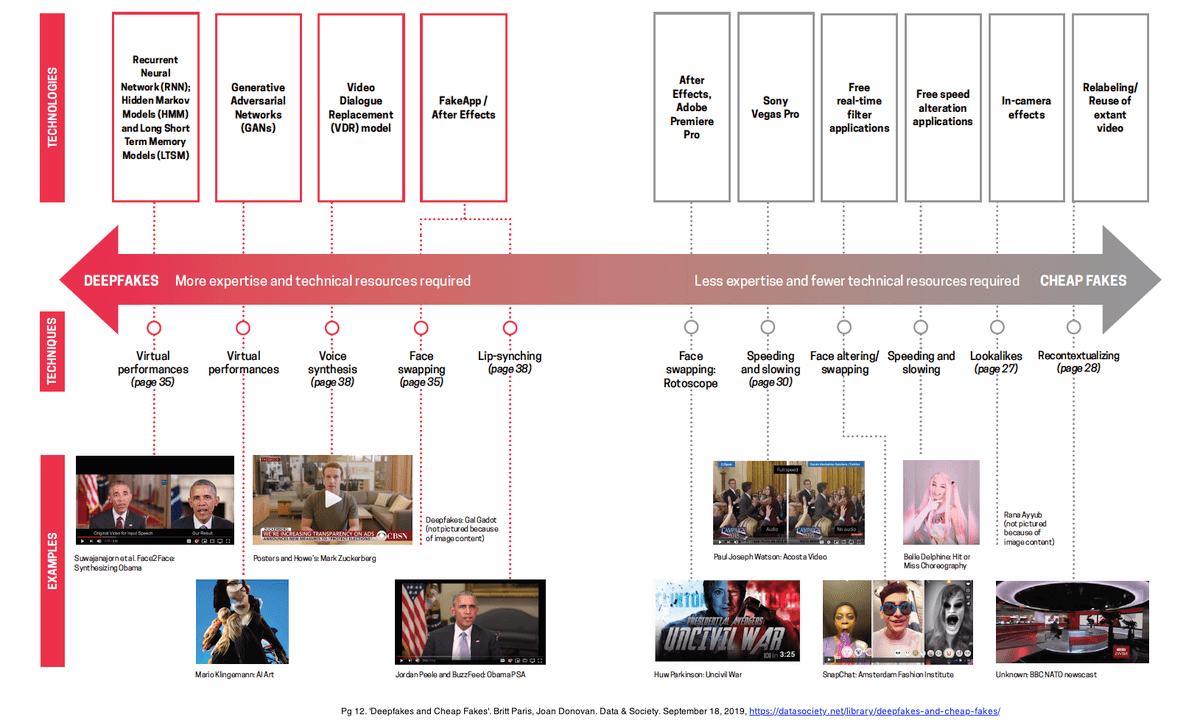

On the spectrum from 'Cheap Fakes' to Deepfakes proposed by Joan Donovan and Britt Paris, this video falls somewhere in between. It is hard to tell exactly which digital manipulation techniques were used to create the effect: the figures' mouth and head movements change with the intonation of the song's words.

The video is not misinformation. But it shows that techniques for convincing digital manipulation, which can be used to generate misinformation, are easily accessible.

Any technology for audio-visual manipulation will find many applications. It will be used for everyday entertainment, as in social media apps, and in advertising. It might even herald a new form of filmmaking. At the same time, these sophisticated digital manipulation techniques will have serious implications for misinformation.

To the extent that misinformation thrives on noise, with the cognitive load of excessive content making it difficult for people to engage critically with the posts they see, the ease of generating abundant, real-looking content will deepen the harms of misinformation. It will also make fact-checking much harder. Fact checkers already struggle at times to trace specific videos shared on chat apps, and details such as a video's surroundings are often what help them trace its original location.

Machine-generated content and Deepfakes will increase the volume of a specific kind of content: content that is hard to trace to a real event or person using reverse image search tools. In contrast, a 'photoshopped' image of a real-world event can be traced back to its source using reverse image search. This was seen recently in the propaganda campaign covered by The Daily Beast. Some of the fake personas used profile pictures that could not be found through reverse image search, and the reporters concluded that the photographs might have been machine generated.
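To make the contrast concrete, here is a minimal sketch of near-duplicate image matching with an average hash ("aHash"), one simple perceptual-hashing technique. It is an illustrative assumption only: reverse image search services may work very differently, and the 8x8 grayscale images, the `average_hash`, `hamming` and `find_match` helpers, and the 5-bit threshold are all hypothetical. A lightly edited copy of an indexed photo still matches its source, while an image with no real-world original finds nothing.

```python
# Minimal average-hash ("aHash") sketch. Assumption: images are already
# decoded, converted to grayscale and downscaled to 8x8, given as lists of
# pixel intensities (0-255). Real reverse image search may work differently.

def average_hash(pixels):
    """Return a 64-bit int: a bit is 1 where a pixel is brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a, b):
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def find_match(query_hash, index, max_distance=5):
    """Names of indexed images whose hash is within max_distance bits of the query."""
    return [name for name, h in index.items() if hamming(query_hash, h) <= max_distance]


# Hypothetical 8x8 "photo of a real event": a bright diagonal band.
original = [[200 if abs(r - c) <= 1 else 40 for c in range(8)] for r in range(8)]

# A "photoshopped" copy: a small local edit (one dark pixel brightened).
edited = [row[:] for row in original]
edited[0][7] = 150

# A synthetic image with no counterpart in the index (e.g. machine generated).
novel = [[200 if (r + c) % 2 == 0 else 40 for c in range(8)] for r in range(8)]

index = {"real_event.jpg": average_hash(original)}
print(find_match(average_hash(edited), index))  # ['real_event.jpg']
print(find_match(average_hash(novel), index))   # []
```

The hash compares each pixel only to the image's own mean brightness, so small retouching flips few bits; an image that never existed anywhere simply has no nearby hash to find.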

Sophisticated digital forensics tools, such as spectrograms, may become standard in every fact checker's toolbox. More optimistically, these tools might become so widely available that any individual could check whether a post was partly machine generated.
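As a rough illustration of what a spectrogram is, the sketch below splits a signal into overlapping, windowed frames and computes the magnitude of each frame's discrete Fourier transform, giving frequency content over time. It uses a synthetic 1000 Hz test tone and a naive DFT for readability. The frame size, hop length and sample rate are arbitrary choices, and real forensic tools rely on optimized FFT libraries and far more analysis than locating a peak.

```python
import cmath
import math


def hann(n):
    """Periodic Hann window of length n, to reduce leakage between frequency bins."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def dft_magnitudes(frame):
    """Magnitudes of the non-negative-frequency DFT bins of one frame (naive O(n^2))."""
    n = len(frame)
    return [
        abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2 + 1)
    ]


def spectrogram(signal, frame_size=64, hop=32):
    """Short-time Fourier transform magnitudes: one list of bin magnitudes per frame."""
    window = hann(frame_size)
    frames = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        chunk = [s * w for s, w in zip(signal[start:start + frame_size], window)]
        frames.append(dft_magnitudes(chunk))
    return frames


sample_rate = 8000  # Hz, chosen for illustration
tone = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(512)]
spec = spectrogram(tone)

# Index of the strongest bin in each frame.
peak_bins = [max(range(len(f)), key=f.__getitem__) for f in spec]

# Bin k corresponds to k * sample_rate / frame_size Hz, so 1000 Hz lands in bin 8.
print(peak_bins[0], peak_bins[0] * sample_rate / 64)  # 8 1000.0
```

Each row of `spec` is one moment in time and each column one frequency band; plotted as an image, that grid is the spectrogram an analyst would inspect for unusual patterns.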

Detection, however, is a cat-and-mouse game. At present, Deepfake detection technology is still playing catch-up. Given how fast the technology is evolving and how versatile its applications are, it will be extremely difficult to 'ban' Deepfakes. The challenge calls for a multi-dimensional response.

Establishing provenance goes a long way toward reducing misinformation. For Deepfakes specifically, this could take the form of companies declaring or watermarking every Deepfake they generate. This won't tackle all Deepfakes, but it will reduce the size of the problem. Others have discussed this idea in greater depth:

Shout out to @prateekwaghre, who pointed out this thread that expands on the idea: https://t.co/8fVTslURdU (tattle, @tattlemade, August 7, 2020)
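One way to picture companies "declaring" what they generate is a public registry of content hashes. The sketch below is hypothetical: the `DeclarationRegistry` class and the company name are invented for illustration, and only SHA-256 from Python's standard `hashlib` is real. It also shows the weakness of exact hashes. Media shared on chat apps is often recompressed, and any change to the bytes breaks the match, which is one reason watermarks embedded in the content itself are also proposed.

```python
import hashlib


class DeclarationRegistry:
    """Hypothetical public registry where a generator declares each output's hash."""

    def __init__(self):
        self._declared = {}  # SHA-256 hex digest -> name of the declaring company

    def declare(self, content: bytes, company: str) -> str:
        """Record that `company` generated this exact file; return its digest."""
        digest = hashlib.sha256(content).hexdigest()
        self._declared[digest] = company
        return digest

    def lookup(self, content: bytes):
        """Return the declaring company, or None if this exact file was never declared."""
        return self._declared.get(hashlib.sha256(content).hexdigest())


registry = DeclarationRegistry()
video = b"placeholder bytes of a generated video"
registry.declare(video, "ExampleSynthCo")

print(registry.lookup(video))            # ExampleSynthCo
# Even a one-byte change, as re-encoding would cause, breaks the exact match.
print(registry.lookup(video + b"\x00"))  # None
```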

Investing more in general media literacy is also critical. Tattle often encounters posts that contain bank details and phone numbers. Digitally manipulated content that is indistinguishable from real content will make people even more vulnerable to such scams.

Finally, though this is not specific to machine-generated fakes, we will have to converge on responsible ways of reporting on such content. We thought for a while about how to share this post: we kept the copyright mark and trimmed the video. Please reach out if you have better ideas.